In a significant advancement within the artificial intelligence community, Apple has released a comprehensive study on its latest creation, MM1, a Multimodal Large Language Model (MLLM) that integrates text and image data with remarkable effectiveness. Published on March 14, 2024, this research paper provides an in-depth look at the development of high-performing MLLMs, emphasizing the pivotal architecture components, data selection, and training methodologies that drive MM1’s impressive outcomes.

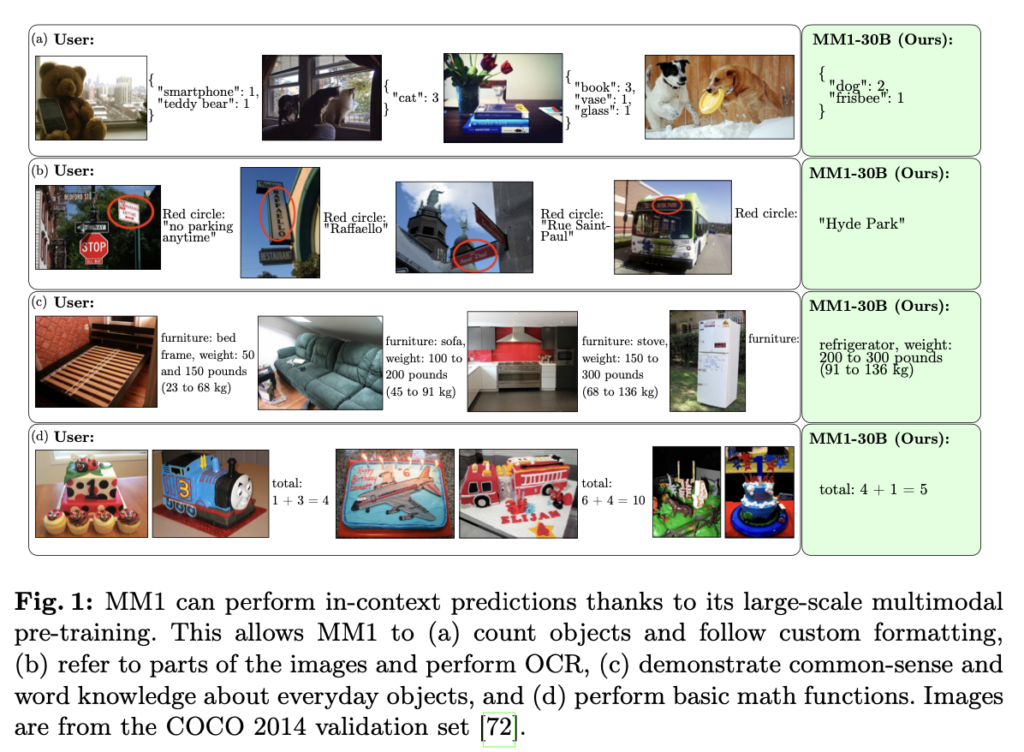

MM1 distinguishes itself by mastering few-shot learning, a technique where models learn from a limited dataset, across diverse benchmarks. This ability demonstrates MM1’s superior comprehension and reasoning, allowing it to execute tasks like object counting, image-based question answering, and intricate reasoning with minimal examples.

The research underscores the crucial role of carefully chosen image-caption, interleaved image-text, and text-only data in reaching top-tier results. Interestingly, the paper reveals that while the image encoder’s features such as resolution and token count significantly influence performance, the architecture of the vision-language connector has a minimal effect.

MM1’s scalability is another highlight of the study. Apple explored different model sizes and utilized a mixture-of-experts (MoE) strategy to scale MM1 to an impressive 30 billion parameters. This approach not only solidifies MM1’s dominance in pre-training evaluations but also ensures its strong performance in supervised fine-tuning across well-established multimodal benchmarks.

Equipped with large-scale multimodal pre-training, MM1 exhibits advanced in-context learning and multi-image reasoning, facilitating few-shot chain-of-thought prompting. Such features enable MM1 to process complex queries by simplifying them into more manageable tasks.

Apple’s MM1 potentially competes with OpenAI's ChatGPT by introducing a more integrated approach to understanding and generating content that combines both textual and visual information. While ChatGPT excels in generating human-like text based on large datasets of textual information, MM1's ability to incorporate and interpret visual data alongside text positions it as a formidable contender in the evolving landscape of AI technologies. This multimodal understanding could pave the way for applications where ChatGPT's text-only approach may fall short, such as in areas requiring nuanced analysis of both visual and textual data.

The release of MM1 by Apple contributes significantly to the artificial intelligence domain, offering a detailed roadmap for the development of future MLLMs. By sharing the insights and design principles gleaned from MM1, Apple not only challenges the current capabilities of models like ChatGPT but also invites the broader AI community to build upon their findings, potentially leading to more sophisticated and capable AI systems.

With MM1, Apple marks a significant step toward bridging the gap between textual and visual data processing, enhancing the potential for AI applications across various sectors and setting a new benchmark for multimodal AI technologies.

Stay informed with The Breaking AI, where we bring you the latest and most significant AI news in bite-sized portions. Tune in next week for more updates from the forefront of artificial intelligence research and development.