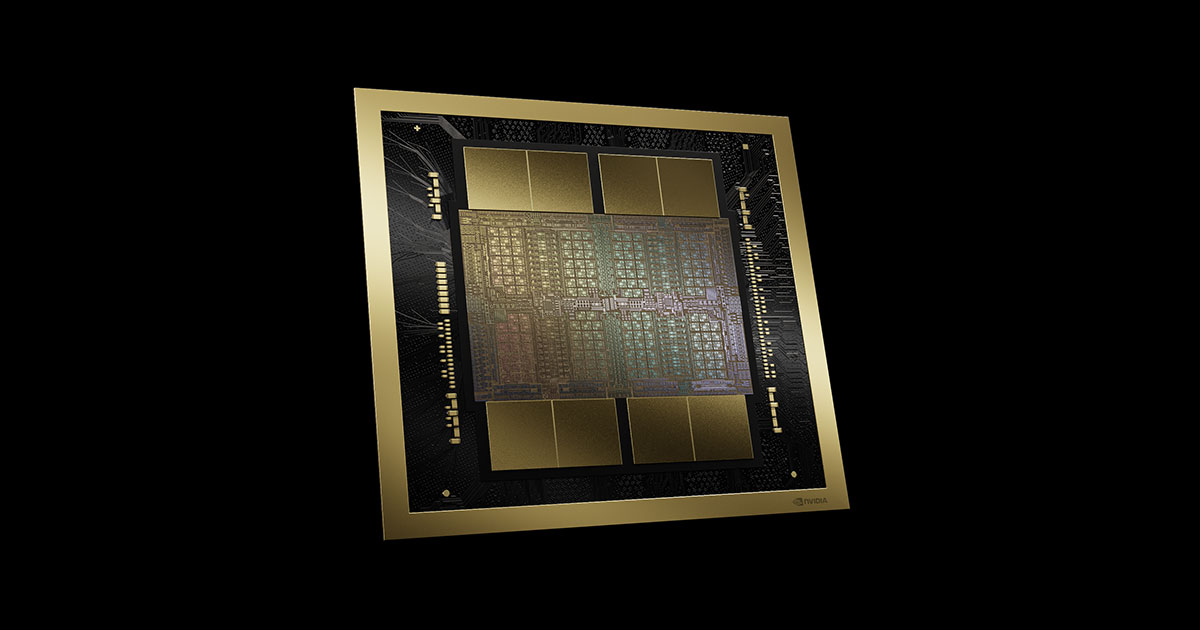

NVIDIA has once again solidified its dominance in the AI industry with the debut of its Blackwell AI chips in MLPerf Inference v4.1, a round in which NVIDIA platforms set performance records across the benchmark suite. The milestone marks another chapter in NVIDIA's relentless pursuit of AI leadership: in its first public MLPerf showing, the Blackwell architecture delivered up to 4x the performance of the previous Hopper generation.

NVIDIA’s Unmatched Performance Across AI Benchmarks

In this latest round of MLPerf Inference, NVIDIA's submissions set new records, with Blackwell making its debut alongside the established Hopper GPUs. The suite spans a range of AI tasks: Dense LLM (Llama 2 70B), Sparse Mixture of Experts LLM (Mixtral 8x7B MoE), Text-to-Image (Stable Diffusion), Recommendation (DLRMv2), Natural Language Processing (BERT), Object Detection (RetinaNet), Dense LLM (GPT-J 6B), Medical Image Segmentation (3D U-Net), and Image Classification (ResNet-50 v1.5).

In the Llama 2 70B dense LLM benchmark, a single Blackwell GPU delivered 4x the performance of its predecessor, the Hopper H100, in the server scenario and 3.7x in the offline scenario. The submission was also NVIDIA's first publicly measured result using FP4 precision on Blackwell GPUs.

Hopper H100 & H200: Continuous Optimization and Unmatched Leadership

While the Blackwell chips are capturing headlines, NVIDIA's Hopper H100 and H200 GPUs continue to dominate the AI landscape. Thanks to ongoing optimizations to the CUDA software stack, these chips have posted leading results across the benchmark suite. In the latest round, the Hopper H200, which offers roughly 80% more memory capacity and 40% more memory bandwidth than the H100, delivered up to 50% more performance than the H100 in the Llama 2 70B benchmark.

In multi-GPU tests on the Mixtral 8x7B LLM benchmark, the H100 and H200 GPUs achieved token generation rates of up to 59,022 and 52,416 tokens per second, respectively. Notably, AMD's Instinct MI300X was absent from the Mixtral 8x7B results, leaving NVIDIA without a direct competitor in that test.

NVIDIA’s Ecosystem: The Crucial Role of Software

NVIDIA's success isn't just about hardware; it's about the entire ecosystem. The company's continuous software tuning has paid off, producing significant performance gains with every MLPerf release. Those gains pass directly to customers already running Hopper GPUs in their servers, making NVIDIA the go-to choice for enterprises and AI powerhouses worldwide.

The company is now rolling out the HGX H200 through various partners, ensuring that its powerful AI solutions are readily available to meet the growing demands of the AI industry.

Edge Solutions and the Future of AI

It's not just heavyweights like Blackwell and Hopper that are benefiting from NVIDIA's relentless optimization efforts. Even edge platforms such as the Jetson AGX Orin have seen up to a 6x performance boost since the MLPerf v4.0 submissions, a significant gain for generative AI workloads at the edge.

With Blackwell already showing such strong performance before its official launch, the architecture is expected to get even stronger as its software matures, following the trajectory of Hopper's continuous improvements. As NVIDIA continues to optimize its ecosystem, the AI industry can look forward to further advances in the near future.